As the last part of the previous post-series about MongoDB and Twitter I’m about to show some plots about an initial speed comparison of the two DBs. As a result, these plots show how MongoDB can perform better than traditional SQL solutions if it comes to speed. Of course the overall picture is more sophisticated. In these cases I focused on the simplest approach possible – retrieving documents from Mongo and rows from Postgre.

If a MongoDb collection and a Postgre table contain similar (or identical) data then a comparison in terms of speed would make sense. I wrote a python script which measures query execution times both in Postgre and MongoDB an then creates arrays of the execution time of each individual query. The script runs every query 100 times after each other. The script access the databases by using two python APIs, PyMongo for Mongo and psycopg2 for PostgreSQL. In this case, these times will represent initial results, because both DBs and queries should be optimized with appropriate, indexed fields.

Queries will result cursors, so the script will iterate through them and call a method named do_nothing() which literally does nothing but returns with no value.

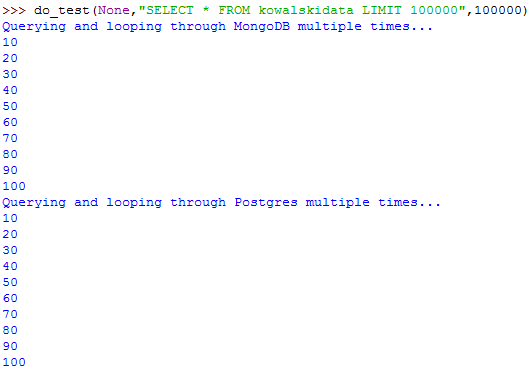

Our first query should represent a case where we want to grab all the documents and rows from the DBs. We pass None as a query paramter for the find() method, and also a script will limit results to 100000 on the mongo collection. As for Postgre, we pass “SELECT * FROM table LIMIT 100000”.

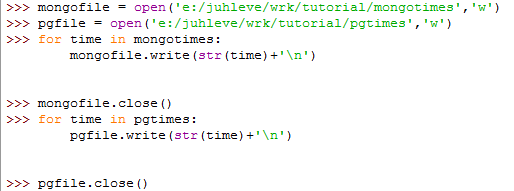

Once tests are finished, we have 2 lists named mongotimes and pgtimes each with 100 entries representing the execution times of each query over 100 realization. Let’s write them to file and import them to R in order to create some nice plots.

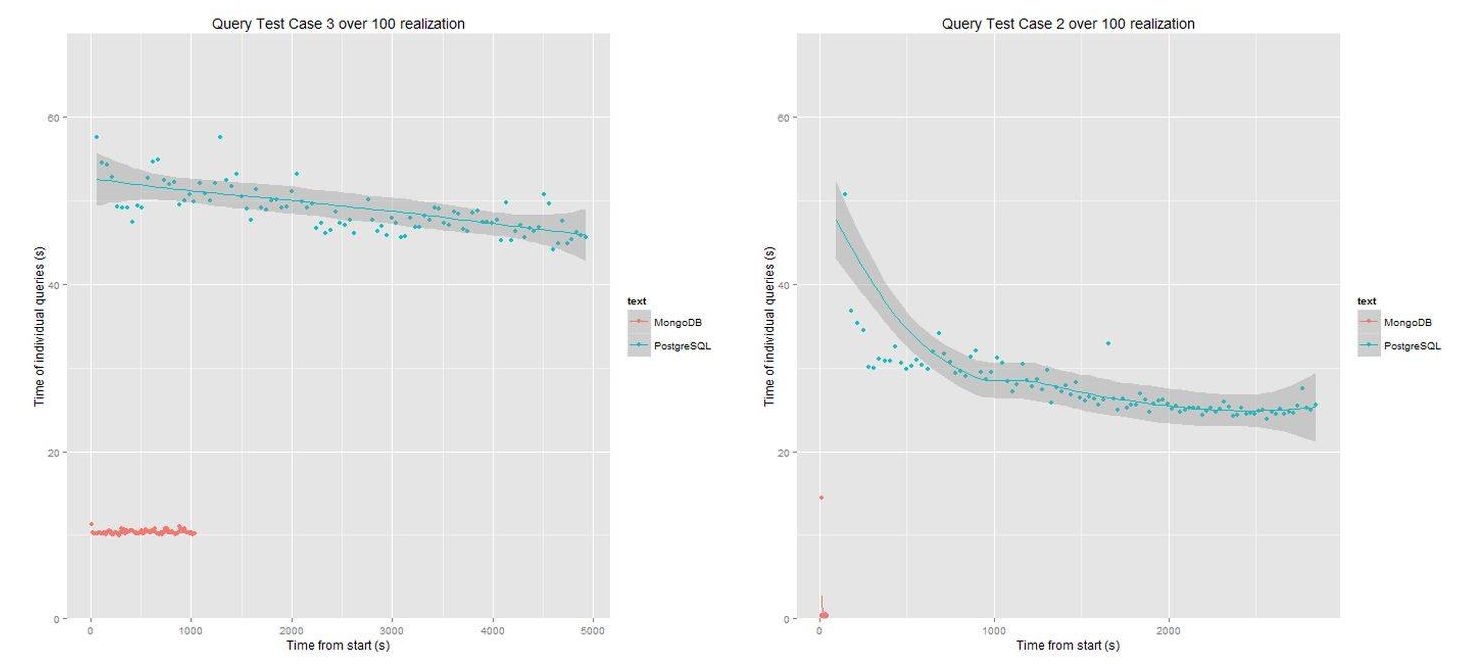

This was the first case. Now, we should repeat the above procedure a few times with different queries in order to get a sense of the performance. Use the following. Let the second case be ({‘text’: {‘$regex’: ‘weather’}}) with a limit of 1000 for MongoDB, and the equivalent SQL syntax, SELECT * FROM table WHERE “text” LIKE ‘%weather%’ LIMIT 1000; for Postgre. Third case should be the following: MongoDB: ({‘created_at’: {‘$gt’: ‘2014-06-18 10:00:00’, ‘$lte’: ‘2014-06-18 12:33:44’}}) PostgreSQL: SELECT * FROM table WHERE “created_at” > ‘2014-06-18 10:00:00’ AND “created_at” < ‘2014-06-18 12:33:44’;

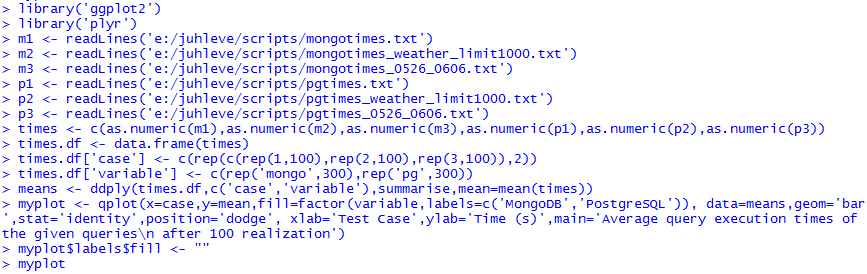

- case: First 100.000 documents/rows from the 2 DBs

- case: First 1.000 tweets that contain the weather keyword

- case: All tweets between 10.00 and 12.33 18 June

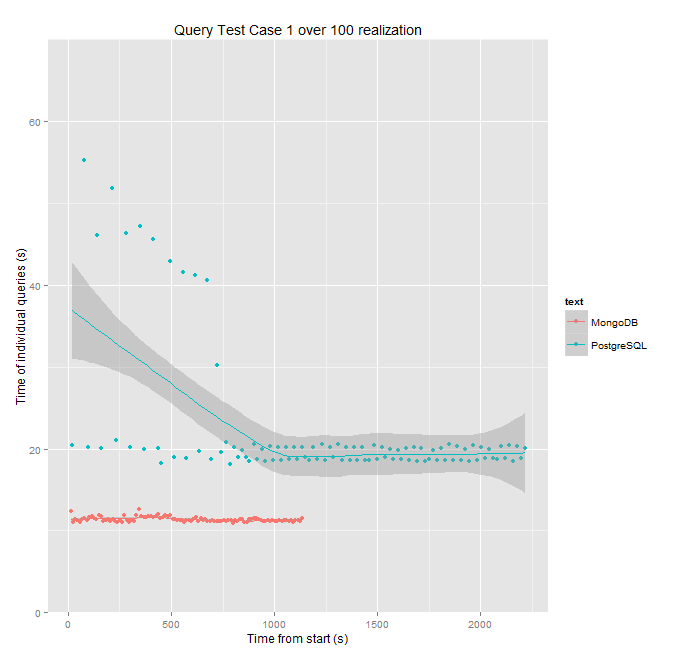

Now, we have to import the times to R and see what we can do with it. We will use ggplot2 package to create nice looking plots and since ggplot2 works well with data frames, we have to create them first. We won’t write a script for this part. We will work with the R shell.

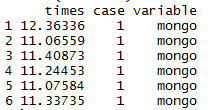

First, we import the text files that we have just written out to file from Python (file names are different here, because I did not want to rerun tests, so I used the old ones). Then we fill a data frame with the time like this.

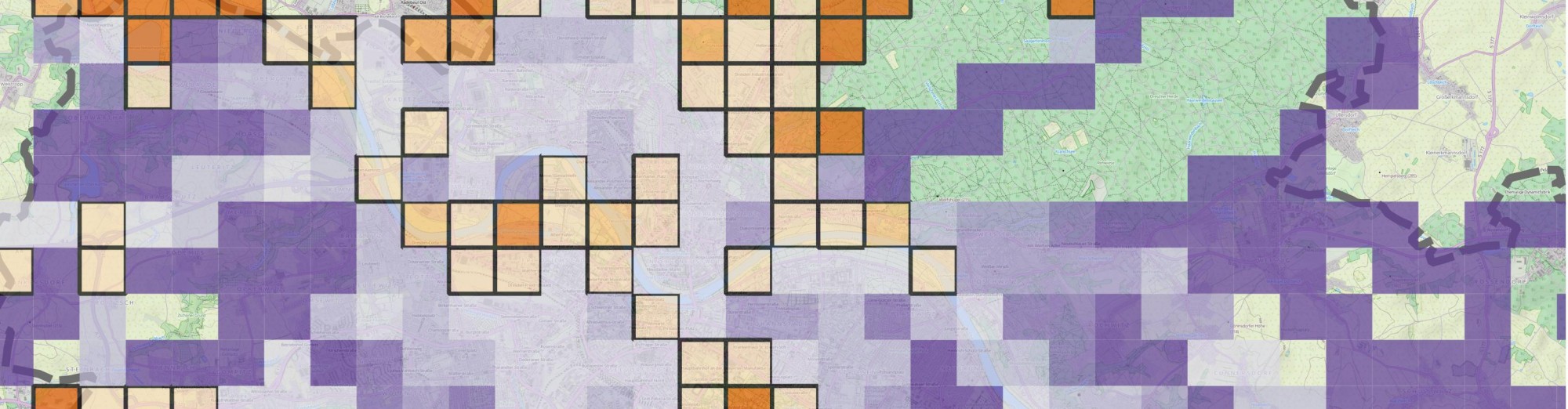

We have 600 rows for each individual query. Each row contains the execution time in seconds, the test case and the database name. Once we have this data frame, we can compute the average time for each test case and for each DB and we can plot it with ggplot2. The result should look like this:

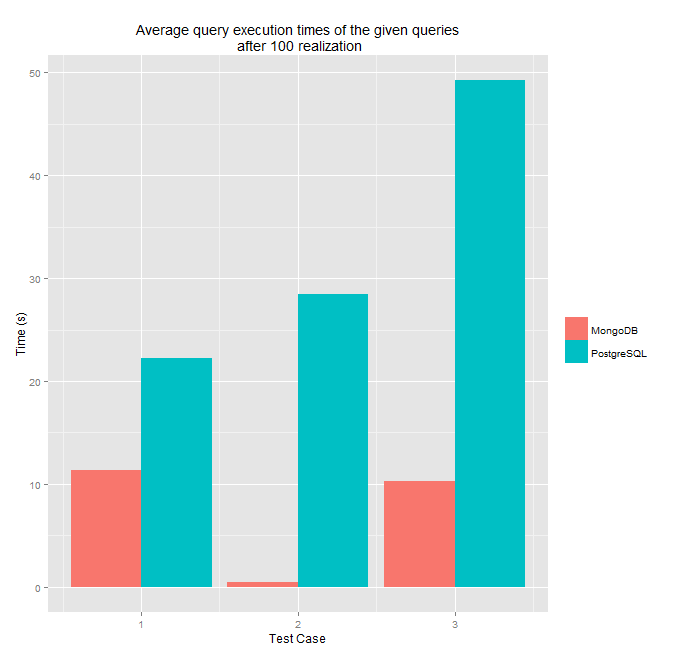

One can make a lot of plots while playing with ggplot2 in R. Here are some other results from the same dataset.

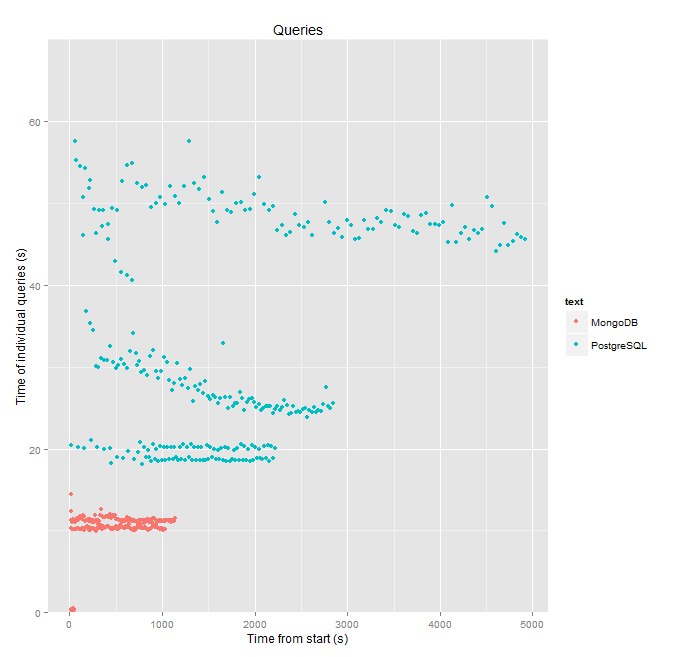

Or here are the test cases individually:

I’m not going into details about how to analyze these plots. You can use your imagination but it is clear that using MongoDB was much faster in this case. Also, you can notice a bigger range of the execution times of Postgre.

Of course, these were the simplest queries possible but sometimes you just want to get what you’ve put into the DB. If you want to get the documents that you’ve put into the DB and there’s no need for joins and other stuff like that you might want to consider using a NoSQL solution.

“Postgres Outperforms MongoDB and Ushers in New Developer Reality”

compared MongoDB v2.6 to Postgres v9.4 beta

http://blogs.enterprisedb.com/2014/09/24/postgres-outperforms-mongodb-and-ushers-in-new-developer-reality/

I’ve heard earlier this year that Postgre 9.4 will be a serious competitor in terms of speed. Even mentioned in this post: http://blog.jlevente.com/twitter-data-analysis-from-mongodb-part-1-introduction/ It seems like it must be true. Thanks for sharing, gotta try it out soon! :)